posted under category: Software Quality on February 13, 2020 by Nathan

Maybe you don’t remember, so let me remind us all how hard programming is.

I have four children. Believe me when I say that I have been trying to get them to program from a very young age, and so far it’s not all roses and candy treats. Usually there is an amount of familiarization that a flourishing person needs to have with a subject in order to comprehend it fully. Learning how to read, for example, takes sounding out letters, followed by Dick and Jane books, followed by frustration, some time off (usually months), and then retrying, usually with a higher amount of success. Learning to read is a long process and doing it well involves creating many new brain pathways.

Imagine trying to teach your parents to program. With only a few exceptions, there’s a very high chance they simply won’t be able to get it.

This is something I wrestle with, and recently I’ve come to the conclusion that the standard human brain can’t easily grasp the concept of templating. Instead of building something, we programmers are building something for someone else to build something. The more meta up that chain our brains can go, the better a programmer we tend to be. Problems are always solved on a higher level than they were created.

Let’s say that we learned programming, we make our first application, we send it out for our users to try it, and what happens? It doesn’t work! We thought of every conceivable possibility. The problem was that we didn’t conceive of enough possibilities.

“Software is not governed by Moore’s Law; more like Murphy’s Law”

– Douglas Crockford

Even when we find a good solution, that doesn’t mean we’ve reached programming bliss.

“Some people, when confronted with a problem, think “I know, I’ll use regular expressions.” Now they have two problems.”

– Jamie Zawinski

Sometimes progress is very hard and it can literally take years to get past our hurdles. My friend Ben wrote this a number of years ago:

“Every time I think I am making progress, I come to realize that I only have more confusion over the ‘right’ way to implement something”

– Ben Nadel

But don’t forget, programming is very difficult. It can be near-infinitely complex. Think about this for a couple minutes:

“Computer programs are the most complex things that humans make. Programs are made up of a huge number of parts, expressed as functions, statements, and expressions that are arranged in sequences that must be virtually free of error. The runtime behavior has little resemblance to the program that implements it. Software is usually expected to be modified over the course of its productive life. The process of converting one correct program into a different correct program is extremely challenging.”

– Douglas Crockford

If you’re programming, you are doing something extremely intricate. You are valuable because most people cannot do what you are doing. But wait! Your value isn’t only in programming. You are especially valuable because of your ability to put business processes into computers, and to explain these computer systems back to the business.


posted under category: Software Quality on February 7, 2020 by Nathan

One of the oldest and rawest forms of software measurement is the inimitable count of the number of lines of code. Let’s talk about that.

“Measuring programming progress by lines of code is like measuring aircraft building progress by weight.”

-Bill Gates

I work at an airplane manufacturer. We actually know the final delivery weight of an airplane, even adjusted for paint and seating arrangements, and in airplane manufacturing, knowing the current and final weight could help. On the other hand, a work of software is done when all the features are complete and the bugs are worked out, or better yet, when we know it will make or save enough money to start selling or using.

Lines of code differ drastically between systems. Choice of programming language is one of the primary reasons for a drastic change in the lines of code. A lower level language may need 100 lines of code to implement a solution, while a higher level one has it as a built-in function: one line. That doesn’t mean either language is worse, just different. Typically we see higher level languages develop applications much faster and debug easier thanks to the smaller codebases, but there is a tradeoff in performance and resource usage.

Another distinguishing factor is what function the application is expected to perform. It’s hard to compare a shopping cart system to a manufacturing system. They have completely different uses, so comparing them by line count tells us essentially nothing. Perhaps one application is more straightforward and requires less code, even if it produces more in some kind of measurable output.

Then there’s the people problem. A developer on one system may have clever tricks that reduce the line count. This could make the codebase equally better or worse, generally depending on the cleverness of the solution, and thus the cleverness of the programmer. We often think we are smart. 90% of programmers believe they are above average, thanks to the cognitive bias called illusory superiority.

This then leads us to the yin-yang problem. More features need more lines of code, however there is a tax because more lines of code become harder for the software writer to comprehend and modify successfully. This is the exact nature of software complexity, and leads to the introduction of bugs, the creation of testing frameworks, the discussion of software quality, and the entire software industry. Remember that no lines of code means no bugs, no rework, no expensive developers, and no security hacks.

Next, we have to consider how the number of lines are calculated. Does that count include 3rd party libraries? Does it include blank lines and whitespace? Does it include comments? We can make a strong case both that all of these should or should not be included.
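To make those counting questions concrete, here is a minimal sketch in Python. The `count_lines` helper and its `comment_prefix` parameter are hypothetical names made up for this illustration, and it assumes a language with single-line comments only. It tallies the same source three ways so the choices show up side by side.

```python
def count_lines(source: str, comment_prefix: str = "#") -> dict:
    """Tally lines in a source string three different ways."""
    total = blank = comment = 0
    for line in source.splitlines():
        total += 1
        stripped = line.strip()
        if not stripped:
            blank += 1
        elif stripped.startswith(comment_prefix):
            # Only whole-line comments are counted here.
            comment += 1
    return {
        "total": total,
        "blank": blank,
        "comment": comment,
        "code": total - blank - comment,
    }


sample = """# compute a total
total = 0

for n in [1, 2, 3]:
    total += n  # trailing comments still count as code
"""

counts = count_lines(sample)
print(counts)
```

The same five-line file is "5 lines" or "3 lines" depending on which rule you pick, which is why a bare line count needs its counting rules stated next to it.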

Lines of code does tell us one important thing, however. It tells us how many lines of code are in the application’s codebase. It can give us a general order of magnitude for what we will find. It can give us the feeling of largeness, especially when we compare file-by-file (though typically a byte count would suffice). That’s it. Number of lines of code can tell us how many lines of code there are. That is all.


posted under category: Software Quality on February 1, 2020 by Nathan

Why do we want to measure software?

Software measurement is what we software developers do instinctively when we start a new job, join a new project, or enter a new team. It’s critical for us to understand the scope of a product so that we can learn our boundaries and understand our place. Understanding software is one of our best skills - the others being the ability to explain it, and the ability to write it (okay, there may be more to it). Our fight against the chaos of the unknown requires that we split up what we can observe into categories that we can understand.

Many times I’ve been given a legacy codebase without a proper introduction. That’s the worst feeling, and was my motivation for writing a big post on how much documentation you should have. If we could somehow bottle up this knowledge and pass it on to our colleagues, it could be very beneficial to future maintainers, helping to lower the long tail of operational cost on a product, and maybe even stave off the inevitable retirement of an app and the cost of rewriting it for a long time. And that’s our goal - it’s to remove cost, not to add it. It’s to understand the world and to be able to explain it.

Good measurements of software can bring a valuable understanding to us and to the people paying our bills. Is the software tiny and easy? That’s good to know! Is the software humongous and complex? That’s good to know! Is the software terrible and in dire need of help? That’s good to know!

How do we measure software?

Answer that and you’re likely to get a grant. The truth is, software isn’t specifically measurable, for the same reason we can’t make software perfect. Sure, at a certain small size we can verify that it works as expected, but, you know, can it read mail yet?

Every program attempts to expand until it can read mail. Those programs which cannot so expand are replaced by ones which can.

Zawinski’s Law

That’s tongue in cheek. These days we could change Zawinski’s Law to interacting with social media or running as a native mobile app.

Our trouble with measuring software is that measuring by any single logical metric is a sure way to miss the mark altogether. Software measurement has to be on a different level, in fact, many different levels. Measuring software can only be done when we take in a system holistically. That means understanding it on a much broader level.

As soon as we measure the size on disk, somebody’s in there changing things. As soon as we measure the memory footprint, someone logs in, adding data. As soon as we measure the performance, another process ties up the CPU and throws off our measurements. Software is soft, and changes too frequently to make accurate measurements that matter. Software is fluid, moving from disk to RAM, and transmitting across networks. It doesn’t take much of a program to utilize all of the hardware given it. It seems that simple metrics just won’t be enough to measure software. Measuring software by its metrics can give a picture of the whole, though, and there’s value in that.

When thinking holistically, one of the aspects that would need to be measured is the quality of a work. We need to measure the quality to complete this picture.

How can we measure software quality?

There are more intangible ways of measuring software, such as number of customer requirements, size or activity of the product’s backlog, and number of users. In particular, we can concentrate on the number of logged defects, if they have been logged at all. None of these are a holistic measurement of the quality of a piece of software, but they do help to paint the broad picture.

I believe that quality is a collection of mostly-intangible aspects. This means quality cannot be measured with a number or a picture. I think maybe a dozen pictures and user interviews and developer deep dives could help complete a mental model of the quality of an application.

There are quality metrics, such as code coverage (the share of code that is exercised by unit tests). That’s a potential measurement of quality, but it could still be a terribly built app with great unit tests. Cyclomatic complexity is one of my favorites, having built a McCabe Indexing tool of my own a few years back. It tells you how complex your CFML files and functions are, and can show line-by-line where your most complex areas are. Another one I’ve looked at is function point analysis, which can map the central parts of an application by usage.
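For a feel of what a cyclomatic complexity number means, here is a rough sketch in Python. This is not the CFML tool mentioned above. It is a simplified approximation that uses Python's standard `ast` module and counts one path plus one for each decision point it recognizes. The `cyclomatic_complexity` function and the `BRANCH_NODES` tuple are names invented for this example, and a real McCabe tool would handle more constructs.

```python
import ast

# Node types treated as decision points in this simplified count.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra short-circuit paths.
            complexity += len(node.values) - 1
    return complexity


sample = """
def classify(n):
    if n < 0:
        return "negative"
    for d in (2, 3):
        if n % d == 0 and n > d:
            return "composite"
    return "other"
"""

score = cyclomatic_complexity(sample)
print(score)
```

The sample function has two `if` statements, one `for` loop, and one `and`, so this sketch scores it at 5. Straight-line code with no branches scores 1.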

Once a software analyst understands the system holistically, they should be able to express that holistic idea to others. Of course this is a breakdown of its own type, and relies on the communication skills of the analyst.

What are the aspects of software quality?

I’ve compiled this list that I’ll go over at a later date, but feel free to suggest more. Quality is:

- Completeness

- Conciseness

- Consistency

- Debugability

- Efficiency

- Extensibility

- Maintainability

- Client Portability

- Server Portability

- Reliability

- Security

- Structuredness

- Testability

- Understandability

- Usability


posted under category: Software Quality on January 30, 2020 by Nathan

The official software crisis was about computing power versus software power in the 1960s. Hardware was outpacing software, which led to the need for higher level languages, better development methodologies, and more workers to write programs. Ask anyone in their later years who had been a programmer for their career and you may learn more than an earful about these problems. What carried over from the old software crisis are some of the signs and symptoms that we still see today:

- Software is over budget

- Software is delivered late

- Software is low quality

- Programs run slowly

- Results don’t meet requirements

- Code is unmanageable

- Sometimes applications are never completed, vaporware

We see a good amount of that today. Personally, I see it all over enterprise software, and frequently in outsourced programs – that’s another topic for another day. I also see it in inexperienced programmers, especially when they are allowed to work alone, and sometimes in development done outside of normal software companies and departments. For real though, sometimes we even see it in more experienced looking teams as well.

The struggle is real. A lot of this software crisis is still in effect. It just doesn’t act like it did back before I was born.

Higher level languages help the crisis because they can be more expressive and produce better applications. This is why JavaScript is great - at great expense to the CPU, JS executes in a VM in a browser in a windowing OS, many levels removed from actual hardware. CPU throughput used to double roughly every 18-24 months, riding on the transistor doubling of Moore’s law, but those days are mostly gone as we struggle against the limits of atomic sizes. That means our higher level programs now have to act like lower level programs. This is why JVM and .NET runtime applications succeed, and why Chrome’s V8 engine has been so successful. They can run abstracted languages as if they were one layer closer to the hardware.

Better software lifecycle processes typically help with late, over-budget software and with missed requirements. I personally have seen how Agile was a giant victory over the 6-month deployment cycle in years before. Tools like CI/CD enablers can put working code in front of our users even faster. Unfortunately, these processes don’t remove the problem of low-quality code, though they help mitigate many of the hurdles.

Isn’t that just software life though? We struggle through it every day in order to make the world a better place. That’s the programming life. That’s what we get paid to do. Maybe it’s kind of the dark side, sure, but that’s where we live. What do you think? Is the software crisis still a term worth using today?


posted under category: Software Quality on January 26, 2020 by Nathan

I really enjoy a lot of the content on Y Combinator’s Hacker News. One recent article that came up was Why do we fall into the rewrite trap?, which was a great look into the anti-pattern of software rewrites instead of thoughtful refactoring.

The comments tend to be as good as the articles. For example, one commenter mentioned Theseus’ Gambit (usually called the Ship of Theseus) - a philosophical engineering question: if you replace every plank on a ship, at what point is it a new ship? This is a great question when it comes to software rewrites. Isn’t a refactor somehow creating a new work? Or is it only a rewrite if you start over?

So many comments agreed with the author and added their own notes. One wrote “rewriting a codebase is a lose-lose proposition: I’ll never suggest or recommend it again.” Others mentioned the need to rewrite based on technologies, like old Flash applications.

A few years ago, I was on a 3-person team asked to upgrade and visually update one of our applications. At one look, you would have guessed it was built in 1996. It was abandoned and disavowed by all the previous programmers. We looked at the code and went over the security, and decided that there was absolutely no part of it that could move forward. Management had been sold on a refactor but we needed a rewrite. We were determined to do what was necessary, so we kicked it off. Our project manager collected requirements and relayed some of our ideas, then my friend Shawn and I worked through the new database needs, new CSS frameworks, new JavaScript frameworks, and the new security system. In a short handful of months, we had a much improved replacement for the old system, ran the implementation plan, migrated all the data, and turned off the old site. It was a big win for the business, and for #TeamRewrite.

Not long after that, #TeamRefactor got a victory on a desktop app - it was a bigger team and while the code was bad - I mean like, a complete misunderstanding of OOP - we decided to refactor a lot of it instead of starting over. It was a somewhat political decision because we didn’t want to make the previous dev team angry. Unfortunately, they were equally offended that we were planning a refactor. Some drama took place. The refactor effort took a long time. We finished it eventually, but we ended up with an app that we still didn’t like (it was better, and many of the initial underlying problems were fixed, but…), our friends didn’t like us anymore, and a lot of time was wasted - far more than we expected. I would vote that refactoring this app was an overall failure.

A little later I was given charge of an IT app that was built only 6 months earlier and still in active development. Its foundation was in ASP.NET WebForms and VB.NET. After I gagged a tiny bit, I swallowed my pride and tried to hunker down and start on improvements. Backing up, our department has been a C# MVC group for some years, and when we follow one path, we try to make the rest of our choices as vanilla, gravy, plain, and standard as possible. This helps with ramping up new employees. The group that started this app chose .NET but then went with every other different choice, probably just based on familiarity. I took a task off the backlog for creating a mobile-friendly version, which turned into a reimplementation of the entire app that ran much faster, was natively responsive and perfectly printable, and ended up being the spearhead of the larger vision for version 2: a complete rewrite. I did it with tact, not offending any parties, announcing my successes and advertising my work while helping the product team make the right choices on the vision. The end result was a much better product that fell closer in line with department goals. Another point for #TeamRewrite!

Not long after, I was handed a C# MVC + React app. The current steward was about to leave the company, so I had a transition week and then it was mine. The app was fine, there was nothing really wrong with it. On day one of independence I had about 30 commits to fix a bunch of things that I thought needed changing but didn’t want to do it and stomp on my former co-worker’s code and feelings. Very quickly, a number of things started working better, I caught up with all the changes from the data team that worked out of our Seattle office, I refactored some things that probably needed it, created my own backlog, and pretty much got bored about 3 weeks in. This is when I started to rewrite the UI in Vue.js. There were some requirements coming down that would have me using Redux, and I do not want to use Redux again, also I couldn’t figure out React-Router’s chunk splitting (I guess it depends on React.lazy()), and I don’t like the React runtime’s bloated file size. On the other hand, I’m a huge fan of Vue, which is smaller, faster and I just like it more. I’m opinionated! I was pretty sure I could keep up with the React app changes and create the Vue version (I could), chalk up the UI change from React-Semantic-UI to Vuetify (Material) as just being a visual update (I did), and get it all done in 4 weeks (I didn’t). It took me 6 weeks. I floated it with the data team and they were positive on it, so I pushed it to the users… they barely noticed. After a while I got some comments about how much they liked the changes. The result was an application that ran a little faster, downloaded quicker, was more maintainable, could easily support all the new requirements, and I just plain liked it more. #TeamRewrite for the win. Was it worthwhile? Maybe, until I consider the pain I would have to go through for implementing Redux, then it was definitely worth it!

So what have we learned?

Maybe that Nathan just likes to rewrite applications for fun. Am I an anomaly if I keep having a lot of success with it? I work on internal enterprise systems; I wonder if the applications I keep inheriting are just really, terribly bad.

For the record, I’ve worked on plenty of systems that I did not suggest rewriting. I have one app that I inherited and have kept alive for twelve years now. It’s been through some major refactors, but it’s been evolutionary the whole time. Of course now it’s time for a rewrite - haha! It’s ColdFusion after all, and we don’t do that anymore. How did this application avoid Nathan’s swift justice against poorly coded enterprise apps? Well it was very well structured from the start - strong MVC roots, layered architecture, it was built on a firm foundation. Maybe #TeamRefactor should get a few more points.

While refactoring is typically less expensive, I have a list of reasons that will ping that rewrite bell in my head.

- When the existing application is very obviously, truly a disaster

- The UI looks like it’s 20+ years old

- When the existing technology platform is inadequate

- When upcoming changes require significant rework

I remember reading - though I can’t recall where - a hard figure. It was something to the extent of: when more than 60% of an application or component needs to be refactored, that application or component should probably be rewritten.

I really don’t prefer to rewrite, and neither should anyone. However, I believe that rewrites are much more possible than we are led to believe.


posted under category: Software Quality on March 2, 2019 by Nathan

People don’t like to talk about real standards for commenting your code. They give you abstract thoughts and ideas, then back up and say just do what your team decides to do. Certainly consistency is important, but what if you are consistently bad? This is why I like Code Complete by Steve McConnell. Steve lays down hard figures. You may not agree with him, but he will get you to form an opinion, even if it’s contrary. So in the same spirit, let me talk about what I think you should comment.

First off, I want to remind you that comments are at a different level than the code. As Code Complete says: “Good comments don’t repeat the code or explain it. They clarify its intent. Comments should explain, at a higher level of abstraction than the code, what you’re trying to do.”

- Comment every code file

  Classes should describe what they are, as simply as possible. View templates should explain their purpose. I think commenting directories with a readme is also nice.

- Comment every method

  Preferably, comment your methods within a metadata block that can be used to generate documentation for you later, if your platform supports it.

- Comment to describe ambiguous variables or acronyms

  Typically these may be endline comments on the variable declaration to avoid confusion.

- Comment on blocks of code

  Again, comments should be on a higher level, and should not detail /how/ a block of code works, but should explain why a block of code is here and what its purpose is.

- Comment surprises

  Point out when you are doing something non-standard, or something particularly clever that may be hard to follow.
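Here is one way those five kinds of comment might look together, as a small sketch in Python. Everything in it is hypothetical and made up for illustration: the billing module, `TAX_RATE`, `MOQ`, and `invoice_total`. It assumes a platform where docstrings serve as the metadata block for generated documentation.

```python
"""Invoice totals for the (hypothetical) billing module.

File-level comment: says what this file is, as simply as possible.
"""

TAX_RATE = 0.08  # sales tax as a fraction, not a percentage
MOQ = 10  # minimum order quantity


def invoice_total(unit_price: float, quantity: int) -> float:
    """Return the taxed total for one line item.

    Method-level comment in docstring form, which documentation
    generators can pick up.
    """
    # Orders under the minimum are billed as if they met it, so that
    # small orders still cover handling costs. (Explains why, not how.)
    billable = max(quantity, MOQ)

    # Surprise: round only once, at the end, to avoid accumulating
    # per-step rounding error.
    return round(unit_price * billable * (1 + TAX_RATE), 2)


print(invoice_total(2.0, 5))
```

Note that none of these comments restate what the code does line by line. Each one carries something the code alone can’t say.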

What do you think? Not enough or too much? What am I missing?

When Not to Comment Code

Almost as important as when to comment, is the inverse.

- For starters, don’t comment if you don’t have anything to add. Don’t comment just because you don’t see enough comments in the code you are looking at.

- Don’t comment if you don’t understand the context and the intent. Your comment will just be inaccurate.

- Don’t comment to explain every variable. Not unless it’s really necessary.

- Never comment a file change history. That’s what source control software (like Git) is for.

- Don’t comment descriptions on obvious variables.

User_Name; // This is the user name

I, Nathan Strutz, IT and computing professional for over two decades and senior level programmer at the world’s best aerospace company, being of sound mind, fully deputize you with the rights, the permission, and the duty to delete comments that match these parameters. The world will be a better place when these useless code comments are gone.


posted under category: Software Quality on February 21, 2019 by Nathan

Five years ago, my friend Shawn and I began a discussion on documentation. He was new to my project, we’ll call it Rhubarb 2000, and bemoaned the lack of documentation. I hadn’t documented enough for my new developer, and I’m guilty of charging this technical debt that my friend had to pay interest on.

So we asked the question: How much documentation is enough? It sat on our whiteboard for weeks. We would talk about it in the afternoons, but accomplish nothing. Eventually, we came up with a number of ways to answer the question.

So, what do you think? Given the transition of an application between two developers in the win-the-lottery scenario (also called the hit-by-a-bus scenario, but let’s think positively), what is the right amount of documentation to have?

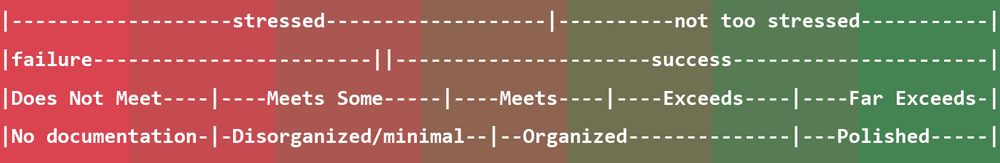

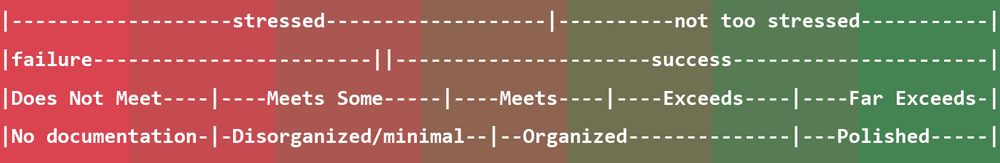

The first successful attack at the question we had was to break it down to two atomic categories: failure and success. Failure meaning it was not a successful transition; the new developer had trouble understanding the new system and grew to loathe it because it didn’t make sense. Success meaning the transition was successful; the old developer did not need to be contacted and the new developer experienced minimal trouble understanding the what-where-when-why-how’s of the new system because the available documentation was sufficient.

This black and white view was a good way to answer the question - we want transitions to be painless. More than that, we want it to be stress free for our imaginary future friend. Many companies don’t have a minimal amount of documentation. My company’s minimal documentation standards are just some boilerplate stuff about processes, which do nothing to qualify for a stress free transition.

Eventually we came to a better, multi-tiered answer. It’s simple. There are five levels taken from elementary grading or performance management that we can judge ourselves on: Does Not Meet, Meets Some, Meets, Exceeds, and Far Exceeds. This way, we can set our own metrics on meeting the expected documentation. It’s a framework for judging amounts of documentation that anyone can play.

Another parallel scale we came up with to sub-classify documentation was: no documentation, disorganized or minimal, organized, and polished. This judges the existing documentation on a scale of bad to good. All together, I think it looks like this.

Minimum Viable Documentation

Let’s say we’re doing our jobs pretty well, and we create at least the amount of documentation that we are required to. That leaves us with some process required deliverables and an entry into the company’s applications database. These are pretty much the required bare minimums, which I call Minimum Viable Documentation.

By creating just the required documentation, we may be meeting the lowest bar, but any future transition (when I win the lottery and retire), is going to be just awful for the person who replaces me. Of course it’s only fair to reverse it and say if someone else wins the lottery (it’s always someone else, isn’t it?), then how much documentation do you hope they have? Personally I hope it’s a lot more than process templates and a record in a database.

Just outside of the minimum, yet still in the category of not meeting expectations, you may find historical requirements and a change log. It’s nice to have these things, and our projects and products really need to have this kind of information, but again, if someone handed it to me, it would not be a very successful transition.

Even apart from winning the lottery, let’s say our project grows and the business decides to grant us more developers next year. Our new helpers should be able to hit the ground running. If we’ve been skating by with the minimum because that’s all we needed, then we have failed our new teammates and need to catch up.

Simply Not Enough Documentation

On our scale of documentation, far to the left, we have the default, which is essentially the equivalent of no actual documentation. We’ve probably all been handed systems like this - heaven knows I sure have. Then again, in my pre-Boeing days, I’ve even created a couple – SHHH don’t tell!

The worst amount of documentation we can provide for any system in a developer hand-off is pretty much just the code. And unfortunately, it’s completely possible that many of us have ~~done~~ seen this. If we were able to “accept no defects,” then we would just say “no thank-you.” Unfortunately however, we’re programmers living in the real world, and we don’t get to say “no” very often.

Enough Documentation to Win the Lottery

Surely some amount of documentation has to be enough to make a transition successful. For an active application, in order to hand off your work to someone else and move to an exotic beach island, what amount of documentation do you need to have already written?

An official transition may have us fill out some kind of information packet. The new developer needs all the file paths, various accounts, and server names. If there is an API, there should be documentation on it somewhere that they can find. This is where every automatic self-documenting tool will help, from Javadocs to Swagger, bring out those links.

Along with API documentation, our new developer needs to see the build and release process - whatever that looks like. For Rhubarb 2000, there is a small resource compile step in an Ant build file, some Git management, then batch files to push various branches to dev and test environments, followed by the overly-complex production release process that we have. You’ll be thankful I’m not going into it. That all needs to be written down somewhere. In addition to this, it will help to have some kind of guide to setting up an appropriate developer workstation - do I need a VM, what web server do I install, etc.

Any new developer must be able to run the unit test suite (and really, you should have some tests, really). That’s the success point on the technical transition - the developer can successfully execute the unit testing suite.

Once our new developer has all of those essentials, bring them into the project. Every application has some kind of ticketing workflow, sometimes as simple as emails, sometimes as complicated as Jira. Introduce them to it and explain how it works. Then introduce them to the operating rhythm. This is especially important for agile projects - if we do work in 3 week sprints, lay out the sprint and meeting schedule for them.

Also note that at this stage of documentation maturity, we should have some form of organization for this documentation. It should be pretty easily discoverable by anyone who joins the project. For me, that means my project has a readme file with the code that points to the GitLab wiki and our Sharepoint site. Likewise, the GitLab wiki points to the Sharepoint, and the Sharepoint points to the GitLab wiki. It’s circular because we want new people to know that they’re in the right place.

After all of that, you can win the lottery or bring in a new hire and expect them to do pretty well, at least as far as the project goes.

Enough Documentation to Really Succeed

So let’s say we have a successful transition, and everything is in line for our new protégé to get going with the project. What’s next? What more could we do to nudge them into the pit of success? This could be more documentation than we really need, but not more than we should have. It’s above the average, and at least meeting some of these gets a thumbs-up in my book.

If we have software diagrams, that’s really helping the next developer. These are possibly the best thing we can pass on. Describing my mental model of the application using images passes on the real thoughts I have about it - my visual comprehension and perception of the application. Objects depicted as boxes and circles can be infinitely valuable later on. Consider the aspects of software that can be diagrammed - object models, big transaction areas where a lot of work is done, how we use MVC, how our services are wired together, usage of IoC containers, and how our data persistence works.

What if we create a physical architecture diagram with servers and developers and source control? That’s really getting there. Perhaps this is a handful of diagrams, one for each system we’re connected with, or one for each environment, or one for each contextual way to think about the application. Maybe we should just have one huge image of all the servers and systems and how they connect.

A database diagram would also be helpful. Some of these can be generated, especially when there are a lot of foreign key constraints, but that isn’t always the case, so try to draw the lines between columns and tables. This should point out the most central aspects of the application and give our new developer an idea of where to look for dangerous areas. As long as the data diagrams aren’t too large, I’ve seen this used a lot for field name lookups.

If we want new developers to keep up with the standards that the team has set, then write a style guide. Write one for code. Write one for visual design. Write one for standard patterns that should be used. I thought much of my work was obvious with the direction I was taking, but it turns out it was naïve of me to expect it without writing it all down.

The last thing for really succeeding is to have features documented for your users, not just your developers. Tailored documentation is helpful here, perhaps one document per role in your system, as well as documents for new features as they’re released. While our customers would love to see this in the Minimum Viable Documentation, realistically user documentation belongs way over here.

That’s my list of good documentation. If you attain this level, you should feel good about yourself.

More than Enough Documentation

Let’s talk stretch goals. What would be the ideal dream for the best possible documentation? This should be your stretch goal. This is what we all wish we could attain, and maybe can with a lot of work. It’s the final level of documentation bliss. Here’s what I mean.

Training screencasts are probably the best way to show new users (and developers) how to use our application. Clearly it’s hard to do a good job and keep this up to date, but those who are dead set on selling the system can churn these out, usually only with a marketing budget.

I learned recently that new applications at my company are required to have a usability study. What that means is probably up for interpretation, especially at your company, but it’s good advice. Going above and beyond for your documentation would be to conduct a true study on the system’s usability, and keep it up to date.

Another great thing for documentation would be to have a real case study that explains real world benefits. We’ve got a system here where the team produced a short case study video of how successful they have been, and it’s made the rounds in the executive level, which has been a huge boost for the program.

Another goal at this level is to have a fully automated self-documentation engine that reads the code and keeps itself updated with the latest information about the application. This would be something a couple steps above Javadoc. The great thing about the auto-doc engine is that documentation on your APIs, objects, database tables and so on is always up to date for you and your team to view.
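To make the idea a little more concrete, here is a minimal sketch of the very first rung of that ladder, written in Python rather than anything I actually run at work. It only reads a module's public functions and classes and prints their first docstring line. The function name `document_module` and the "(undocumented)" marker are my own inventions for illustration, and a real auto-doc engine would need to cover far more (database tables, services, and so on).

```python
import inspect


def document_module(module):
    """Return a plain-text summary of a module's public functions and classes."""
    lines = [f"Module {module.__name__}"]
    for name, obj in sorted(vars(module).items()):
        if name.startswith("_"):
            continue  # skip private names and module internals
        if inspect.isfunction(obj):
            label = f"function {name}{inspect.signature(obj)}"
        elif inspect.isclass(obj):
            label = f"class {name}"
        else:
            continue  # constants, imported modules, etc. are ignored here
        # Use only the first docstring line; make missing docs visible.
        doc = (inspect.getdoc(obj) or "(undocumented)").splitlines()[0]
        lines.append(f"  {label}: {doc}")
    return "\n".join(lines)
```

Calling `print(document_module(some_module))` as part of a build would regenerate the summary every time the code changes, which is the whole point: the documentation cannot drift if it is produced from the code itself.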

The last thing I’ll recommend for your stretch goal is a technical writer maintaining all of your documentation. There are numerous benefits, but most notably would be the commonality and organization of all your documents regarding screenshots and writing styles, and also the fact that your documentation would be up to date for as long as they work with you. Keeping your documentation true and up to date is really what your goal is.

Wrapping Up

So you’ve seen my documentation chart. I encourage you to build your own and define what is important to you on your project or portfolio. I’m sure there are gaps and things I haven’t thought about. Why not bring them up in the comments?

posted under category: Software Quality on March 21, 2016 by Nathan

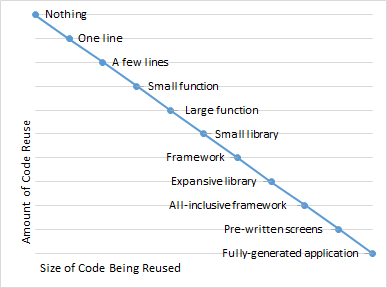

Something that comes up from time to time is the idea of reusable software, and why can’t we really reuse things that other people wrote. It’s a mystery. Robert Glass, a programming legend who worked at my company (1965-1982) said this:

- Reuse-in-the-small (libraries or subroutines) began nearly 50 years ago and is a well-solved problem.

- Reuse-in-the-large (components) remains a mostly unsolved problem, even though everyone agrees it is important and desirable.

Now we like to think we’re so much better off. Look at cave man. He can’t reuse software. He can’t even boil an egg. Look at us now. We have mechanical egg poachers and GitHub and NPM.

Using a library (jQuery, Bootstrap, React) is still “reuse-in-the-small.” Bigger reuse means more homogenization, and all programmers have not-invented-here syndrome to some degree that usually teeters between healthy and unhealthy. We like our own software because we know how it works, we know how to fix it, and it works exactly how we want it. We don’t like pre-written software that we can’t control.

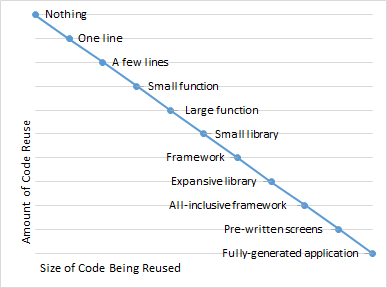

Living in the world of StackOverflow and GitHub, I believe that we can extrapolate Glass’s statements even further. It looks like this:

amount of code reuse by the size of code being shared

That’s why programmers lose their minds when they see something like Ruby on Rails that generates a working MVC app based on some database tables. Of course the reality ends up being something different, but you could almost hear the internet erupting with excitement back when Rails was first introduced.

Anyways, Glass’s proverb remains true. Code reuse in small parts is solved. Code reuse on a large scale is wanted but still far off.

posted under category: Software Quality on December 21, 2012 by Nathan

This year (2012, for posterity's sake) my project at work took on an Agile methodology. Why? 2011 was a productive year, and we made a lot of people happy with our software, but we had only about three giant software releases for the whole year. With releases so far apart, I could never know if what I was doing was going to work for everyone. It also meant that, while the software was better tested, when there were bugs, our customers would have to work around them for months until we had the fix out.

Accelerating our release cycle to every two weeks meant that even if something was broken, it wouldn't stay broken for long. It also meant we had to be on top of our tasks a lot more, with a stronger focus on what we are doing today and what we will be doing tomorrow.

How did we do it? We initially decided on twice-weekly scrum meetings, just to say what we did and what we were doing next. This wasn't enough for the project manager, so we bumped it up to 4 times per week (the 5th day we had a regularly scheduled customer meeting). We began the year by listing all of our customers and all of their existing and upcoming tasks, including our own nit-list (like upgrade the database version, wash the dishes, support the new manufacturing system, etc). This is our backlog. We worked with our customers to set a priority on each item, then planned a sprint strategy, taking tasks with the highest priority and mixing them with the most pressing deadlines. Throughout the year we tracked new business and requests into an issues log, promoted items from our backlog into workable sprint items, and we got a lot of things done that way.
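For the curious, the sprint-planning step can be sketched in a few lines of Python. This is not the tool we used (we mostly used a list and a meeting); it is a rough illustration that assumes each backlog item has a customer-agreed priority, an estimate in days, and an optional deadline. The names `BacklogItem` and `plan_sprint`, and the rule that the earliest deadline breaks priority ties, are my assumptions for the example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class BacklogItem:
    title: str
    priority: int                    # 1 = most important, as agreed with the customer
    estimate_days: float
    deadline: Optional[date] = None  # not every item has one


def plan_sprint(backlog, capacity_days):
    """Pick items for one sprint: highest priority first, earliest deadline breaks ties."""
    def sort_key(item):
        # Items without a deadline sort after items that have one.
        return (item.priority, item.deadline or date.max)

    chosen, remaining = [], capacity_days
    for item in sorted(backlog, key=sort_key):
        if item.estimate_days <= remaining:
            chosen.append(item)
            remaining -= item.estimate_days
    return chosen
```

Anything that does not fit in the sprint simply stays in the backlog for next time, which is about how it worked for us in practice.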

We did learn some lessons though. First, daily scrum meetings are great, but when our customer gets involved (and he loves to be involved), he tends to ask questions mid-update, slowing the scrum to a crawl and turning what should be a 5 minute meeting into a 45 minute meeting. We should really cut that out. We also need a system that will take customer feedback and track it through an official change request to a prioritized backlog item, to an in-work task, to a bullet point on our release notes. There are things that almost do this, but they usually require something custom for the customer feedback part at the very least, and few of them have been 'blessed' for use within my company. Finally, we have some release systems that do not play well with Agile. One system that notifies the help desk about outages and work in the area takes an hour to fill out each time we do a production release. Another system we may be forced to use requires a gated software version validation process that will take a day's worth of paperwork for each release.
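The tracking system I keep wishing for is, at its core, a small state machine. Here is a hedged sketch in Python of the chain described above; the class and stage names are hypothetical and this is not any product we have actually been allowed to install.

```python
from enum import Enum


class Stage(Enum):
    FEEDBACK = "customer feedback"
    CHANGE_REQUEST = "change request"
    BACKLOG = "prioritized backlog item"
    IN_WORK = "in-work task"
    RELEASED = "release note"


# Each stage may only advance to the next one in the chain.
NEXT_STAGE = {
    Stage.FEEDBACK: Stage.CHANGE_REQUEST,
    Stage.CHANGE_REQUEST: Stage.BACKLOG,
    Stage.BACKLOG: Stage.IN_WORK,
    Stage.IN_WORK: Stage.RELEASED,
}


class WorkItem:
    def __init__(self, summary):
        self.summary = summary
        self.stage = Stage.FEEDBACK
        self.history = [Stage.FEEDBACK]  # keeps the trail back to the original feedback

    def advance(self):
        """Move the item one step along the chain and record it."""
        if self.stage not in NEXT_STAGE:
            raise ValueError(f"'{self.summary}' is already released")
        self.stage = NEXT_STAGE[self.stage]
        self.history.append(self.stage)
        return self.stage
```

The useful part is the `history` list: when a bullet point lands in the release notes, you can still walk it back to the customer comment that started it.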

The worst downside though is that it sucks my energy. I have to be on task a lot more, which has meant less blogging and less tweeting. That's sad for me.

In summary: twenty-five software releases on our two-week sprint cycle, less stress on the developers, more pressure on the project manager, and happier customers. All in all, it has been a successful experiment that I think we will continue with.

posted under category: Software Quality on March 13, 2012 by Nathan

A discussion erupted recently, on one of the various discussion groups I subscribe to, about features that are not officially deprecated but are discouraged for new applications going forward.

It's hard to explain in a definition, so a case in point is needed.

The Application.cfm file is a ColdFusion construct as old as I can remember. It's not going away, but it is essentially feature complete.

More recently, ColdFusion has given us Application.cfc. The capabilities it gives us are far above and beyond what we had previously, and developers are encouraged to upgrade their applications or at least use Application.cfc in their next projects.

In this case, Application.cfm has been socially deprecated.

Here's another one: Fusebox. It's not a language feature so much as a framework, but if your application uses Fusebox, your program is socially deprecated. Now my friend John is bringing it back, but for the past 5 years, if you have started a new app with Fusebox, you used a socially deprecated framework and your "cool kids" card has been revoked.

Using socially deprecated features and frameworks puts your application at risk of falling into technical debt. It's a slow roll down a long hill that will show in bugs that are never fixed, areas of the application that everyone avoids because they are afraid of causing errors, and eventually, a lack of knowledgeable developers, which will leave you stranded.

The way to avoid social deprecation is to keep engaged with the developer community. Read the blogs, engage in the discussion groups and visit the user groups.

Can you think of anything else that's socially deprecated? I can think of a bunch of things. CFUpdate. Prototype.js. Old stylized code like capitalizing tag and attribute names. PHP. Internet Explorer. CFPod. The list goes on and on.

posted under category: Software Quality on September 9, 2011 by Nathan

Last July I gave my presentation, Holistic Program Quality and Technical Debt, to the Denver CFUG, for my friend John Blayter. This is cool and stuff, but the truly impressive thing is that the audio and video are consistent all the way through, and the awful jokes were even relatively funny.

If you haven't seen it, this is the definitive version. This is the one to sit through.

Watch Holistic Program Quality and Technical Debt

Thanks, John, for getting it up there, even though I may have insulted your programming around the 14:30 mark. Yeah, I apologized, then pointed out exactly where I threw you under the bus. That just happened.

Update: You can hear my awesome kids screaming their heads off around 42:00. Fantastic.

posted under category: Software Quality on July 1, 2011 by Nathan

This month it looks like I'm presenting Holistic Program Quality and Technical Debt (HPQaTD? Can someone pronounce this for me?), twice! If you haven't seen it yet, you have two chances in July.

Tuesday, July 12, for the Denver CFUG and my friend John Blayter. Read about it and RSVP at The Denver CFUG's Meetup site. It's at 5:30 PDT / MST, or 6:30 MDT in Colorado. John ran the CFUG in Phoenix for a few years, and actually was my boss at Interactive Sites until he moved to Colorado.

Thursday, July 14, for the Central Georgia CFUG and my friend Tim Cunningham. I have not met the user group manager Matt Abbott, but I warn you not to misspell his name. You can see the event and RSVP at The CGCFUG's Adobe Groups site. This one is at 3:30 PDT / MST, or 6:30 ET. Tim hooked me on to this as a trade in services for his presentation on Git at the June Phoenix CFUG.

Both of these are remote presentations. If you want the address, you should follow me on twitter, where I tend to post only the most important subjects, and links to cool speeches.

Thanks for the interest in my talk!

posted under category: Software Quality on May 14, 2011 by Nathan

A number of people asked to have my presentation slides from my talk on Holistic Program Quality and Technical Debt, so here they are.

Holistic_Program_Quality_and_Technical_Debt_CFObjective_2011.pdf

And here are a few sample slides:

Thanks to everyone who came, especially those I pestered into coming. I really do appreciate it.

posted under category: Software Quality on April 12, 2011 by Nathan

We covered the easy list of things you can do to pay down your technical debt, but left off at refactoring. If you wanted a nice, vague discussion, you could read Wikipedia on Code Refactoring, but you won't find anything you can take home. It would be easier to read the definition, which just quotes the only important part of the Wikipedia article:

Code refactoring is the process of changing a computer program's source code without modifying its external functional behavior in order to improve some of the nonfunctional attributes of the software
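If that definition still feels abstract, here is a small made-up example in Python. The shipping rules and numbers are invented purely for illustration. The "before" function repeats the same pricing rule twice; the "after" function expresses it once with the numbers pulled out into data. The check at the end is the important part: the external behavior has to stay identical.

```python
def shipping_cost_before(weight_kg, express):
    # Original version: the same pricing rule is written out twice.
    if express:
        if weight_kg <= 1:
            return 10
        else:
            return 10 + (weight_kg - 1) * 4
    else:
        if weight_kg <= 1:
            return 5
        else:
            return 5 + (weight_kg - 1) * 2


# (base price, price per kg beyond the first), keyed by "is it express?"
RATES = {True: (10, 4), False: (5, 2)}


def shipping_cost_after(weight_kg, express):
    # Refactored version: one rule, with the numbers pulled out into data.
    base, per_extra_kg = RATES[bool(express)]
    extra_kg = max(weight_kg - 1, 0)
    return base + extra_kg * per_extra_kg


def behaves_the_same(weights=range(0, 11)):
    # The external behavior must not change, so compare old and new directly.
    return all(
        shipping_cost_before(w, express) == shipping_cost_after(w, express)
        for w in weights
        for express in (True, False)
    )
```

Nothing a customer can see has changed, but adding a third shipping tier is now a one-line edit to `RATES` instead of another copy of the if/else ladder.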

So how do you refactor? There are three places that I see it.

First, if you obeyed the golden rule of technical debt, you are keeping track of your loans. Refactoring in this sense is nothing more than finding those areas in your application and working in what needs to be done. It's easy, which is why you need to follow that golden rule. The huge advantage here is that you can put estimates on it and schedule it into your project. This is really more like a budgeted technical debt payment, but it can still fit the refactoring label.

Another path to refactoring is to overcome your knowledge gap. If you wrote a program, you probably did it as well as you could — minus the gaps you know about, but the gaps you don't know about are where your best improvements lie. That's where true refactoring comes in.

I was talking about this the other day with subversively fixing technical debt: reading a book is the best time to come up with new ideas. I guess it's obvious what books I read. The design patterns book was especially great for this. Reading along to tech books sparks all kinds of new ideas. More often than not, they are unrelated to the content I am reading, but not always.

Once you learn what your gap is, and you visualize how to fix it, there is only a matter of time before you get to see it through. This process is why I believe that if you want to have refactoring improvements in your code, (1) you have to be learning, and (2) they almost always come organically.

These organic enhancements like to start deep down in my thinking. Often I read something that produces a small shift in what a good program should look like. Eventually that idea turns into something I can do within my application, like introduce a new kind of object in just the right spot, break a form down into reusable parts, or develop a useful independent API that makes everything feel better. One day I eventually work up a physical understanding of the code that needs to change. I introduce the modification in a safe development branch, then I see if it fits. It usually does, but if not, I roll it back, no damage done.

The last kind of refactoring is incidental refactoring. I do this all the time.

Often while working on one programming task, I find ways to enhance things that we never knew needed enhancements. These tend to be little things. Whenever I am in one area of an application I take ownership in, I look around to see if there is anything else I can improve.

Last Friday, while working on one feature, I noticed a block element that needed some extra margin spacing. A tiny refactoring that people won't notice, but will make a difference to the overall feeling of quality. Later, while removing some inline javascript, I found some unused code which led to an entire unused feature. That allowed me to delete a template, some view wiring and a controller. Less code means less to maintain. All that, and I just stumbled onto it.

Incidental refactoring is the best. It keeps a system fresh, it fixes those little bugs and near-bugs, it is the driving force to cleaning up ugly code, and it makes customers happy.

Incidental refactoring does not happen all the time. There is a single way that I know to produce it, and that way is ownership. You don't plant a tree in front of someone else's house unless they ask you to. You probably wouldn't rewrite a funny loop in a program you have just been introduced to unless you were tasked with it (although I have met a few brazen enough). When you have ownership of an application, you check in capitalizations and misspelled variables. Ownership means caring because the application's customers are your customers, the application's success is your success.

Refactoring is an ongoing process. You work at it and you figure something else out, you think about it more and something new comes to mind. Refactoring is a process, not a task. It's a lifestyle, not a goal.

posted under category: Software Quality on April 11, 2011 by Nathan

I realize a lot of my technical debt blog writing has been all talk. That's fine, but boring. What actual, practical thing can you do now - today - that pays down your technical debt?

(By the way, I'm talking about this stuff at CF.Objective() 2011, May 12-14 in Minneapolis. It's a month away so right now is the time to book your tickets. Come meet me and see my presentation Holistic Program Quality and Technical Debt!)

Number one, most important and easiest win - make your code readable. It's debatable if ugly code is actually any kind of technical debt. You didn't take out a loan to not indent a javascript file. You just had a sloppy coder who somehow learned to do it wrong. On the other hand, if technical debt is the gap between bad and good, you can put it in the debt pile. The great news is that you can make it readable as you go, and you can even automate code formatting. Easy win.

Going deeper into code readability, you can take it beyond tabs and line breaks. Commenting and naming are as important. Comment strategies can vary per team, but decide on a preference. Components, functions, files and variables should all have obvious names, not too short, always consistent. Again, standardize it with the team. You can alleviate almost all of the stress of working with a complex system simply by making the code readable.

I hate to say this. It's 2011, I shouldn't have to. If you don't have source control, you have done yourself a ginormous disservice. If you are working on your own, you will love what a source control system will do for you. If you work on a team without it, you are handicapping every aspect of the team's ability. If your boss tells you "no," tell your boss "yes."

The next practical thing is to make a build process. You do this because when you document a process, everyone can know how it works, and when you automate a process, you make it work right every time. A build process documents and automates at the same time - big and instant win for quality. You also do this so that you can add unit testing, then eventually continuous integration. I like Ant for my build processes; the great community support makes it worthwhile. Start it off simple and stick to it. There are ways to sell Ant to the team above the intellectual reasons, like the jsMin task that compresses all your javascript when you produce a build. Fancy!
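If Ant's XML scares the team off, the same "document and automate at once" idea fits in a short script. What follows is a minimal sketch in Python, not my actual Ant build: the step names, the `VERSION.txt` stamp file, and the helper functions are all assumptions made up for the example. Each step is just a named function, and the build stops at the first one that raises.

```python
import shutil
from pathlib import Path


def run_build(steps):
    """Run named build steps in order; an exception in any step stops the build."""
    completed = []
    for name, step in steps:
        print(f"[build] {name}")
        step()
        completed.append(name)
    return completed


def copy_sources(src: Path, dist: Path):
    """Start from a clean output directory so stale files never ship."""
    if dist.exists():
        shutil.rmtree(dist)
    shutil.copytree(src, dist)


def make_steps(src: Path, dist: Path, version: str):
    # The list itself is the documentation: this is what a release consists of.
    return [
        ("clean and copy sources", lambda: copy_sources(src, dist)),
        ("stamp version", lambda: (dist / "VERSION.txt").write_text(version)),
    ]
```

Running `run_build(make_steps(Path("src"), Path("dist"), "1.2.0"))` does the same thing every time. Unit tests, and later a minify step, become one more entry in the list.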

Beyond this lies the territory of refactoring, which encompasses everything larger than changing variable names and line breaks. I'm talking about practical refactoring next time. Stay tuned.

posted under category: Software Quality on April 9, 2011 by Nathan

I have been talking about my friend, who was in a technical-debt-pickle when he called me. Something else he mentioned over the phone was that the owners of his giant application were not keen on paying for anything but features. It's not surprising.

In an agile project, work is done in sprints 1-3 weeks in length. Teams set goals for features they can accomplish in that time. When application customers hear about a refactoring sprint, it goes over like a lead balloon. "You want to work for 2 weeks and not add features?" Like Oracle and open source, they just don't get it.

So what do you do when you can't get a budget for fixing the last decade of technical debt build-up?

I gave him my best advice, and some of my points leaned toward subverting the wishes of uninformed management and customers. Without putting your job in jeopardy, I think this is something you have to do sometimes. But how do you do it? How do you pay down some of the technical debt principal when managers say no?

First, you need to have the technical debt conversation with the customers & owners. Help them realize that by allowing this debt to live on, they are perpetuating higher interest payments in IT man hours. No sane management group would deny you the time you need. However, I know that a lot of companies are run by the most insane people.

Shot down or passed over from there, you have to pad estimates. You have been estimating high anyways because of those technical debt interest payments where it takes half of your time trying not to break other modules, finding the right files to copy and paste, or trying to remember how to use the terrible API left by some former employee. Pad higher and take that extra time to fix some of the bigger problems.

I find that when I get myself fully into a program, where I can feel it breathe, I start to dream about it. It's a good thing. All my best system thinking tends to happen while I'm reading a book. Offline, I discover the pattern and identify new objects that will help me jump that next hurdle. At work the next day, I merely have to type what I envisioned.

Refactoring doesn't take a lot of time, it takes better thinking. A little padding and some daydreams will get you there.

You may be able to add this padding to each release cycle, agile or not. Call it unit testing. Call it the software release time, call it the cycle preparation time, call it quality insurance. No reason to lie about it being there. Also, no reason to share the gritty details of what's being done.

With limited refactoring time, it's ok if a refactor project is stalled halfway through. When I leave one and pick it up again, I find that I have newer, better ideas.

Going back to the people you report to, I would point out my review of my friend Terrence Ryan's book Driving Technical Change, which really seems to fit the bill here.

The final way to subvert authority for the betterment of all, which goes along with the golden rule of technical debt (keep it visible!), is to bring it up, often. It's easy to ignore a dog waiting patiently by the door, but when it keeps yapping, you'll want to let it out. It's easy to say no to some engineers who want to run an expensive project, but if they just won't shut up about it, it becomes easier to say yes. Once, my team begged the CEO of our small software company to rewrite an app. Then we begged some more. Then we begged some more. We pointed out problems, we started to shout it from the cube-top, and eventually, he gave in. It works.

I don't think this list is exhaustive by any means. Does anyone want to share their experience or ideas?

posted under category: Software Quality on April 2, 2011 by Nathan

So I was telling this story about my friend, but I was distracted by the thought of avoiding technical debt, or actually how you shouldn't. Then I completely lost focus and talked about how technical debt isn't really the bad guy; fancy cars are.

Back to the story though. My friend was telling me about his situation, and his application, but more than that, I began to feel something coming through the phone that I didn't expect. It's the change in voice someone begins to make when they are up to their shoulders in tar and they aren't sure how much longer they can hold out. I hoped I could help him.

My friend told me about this monolithic application, a company staple, the most important program in their world. It did everything for everyone. No customer was ever declined. No marketing directive was ever shot down. No timeline was ever exceeded.

Guess what comes out of that kind of meat grinder?

Dozens of developers piling feature upon feature over an old site with tight deadlines and owners who don't understand programming, delivers spaghetti almost every time. Maybe even mashed potatoes, if there is such a thing in programming terms. This is an incubator for technical debt.

So, true to my style, I unloaded everything I could think of about technical debt, like a verbal shotgun. It was 8 months of technical debt knowledge dropped into 3 minutes of monologue. Here are the big takeaways I tried to leave him with:

First, understand technical debt. You are drowning in technical debt interest payments.

Technical debt may be the problem, but more important, technical debt is the conversation. The conversation flows up to your boss and their boss. This conversation is what can bring change back down to you.

You have to refactor. The longer you go, the more you have to do it and the bigger it has to be.

If you can't get time to refactor, you have to take that time. It is part of your job, it has to be. I'll talk more about that later.

Last on this list, remember the golden rule of technical debt: make it visible. Write it where you can see it.

It takes a champion to do what my friend needs to do. I hope he can do it.

posted under category: Software Quality on March 30, 2011 by Nathan

So I was trying to tell the story of my friend who called me up a few weeks back. I got distracted by the question of whether you should even try to avoid technical debt. Like I said, he has a lot of technical debt.

Remember that technical debt is a way of describing certain problems in programming.

See, there I get stuck on another question: Is technical debt even bad? Is it really just problems? I mean, it is, right? It represents a hole in our program that needs to be filled. The truth is, technical debt isn't the problem, your application is the problem. Technical debt is just a metaphor.

Of course no metaphor would be so damaging if it were only a figure of speech. The phrase "technical debt" is actually very valuable. The real strength lies in its ability to cross boundaries, into management and ownership and finance.

Back in the land of nerds where I dwell, where technical debt has a face, it describes a gap, not necessarily a problem. It's the distance between a perfect application and an app that basically mostly sort of kind of works. Debt is the effort that will get me where I want to be.

My first car was a 1989 Geo Metro. Stick with me here.

I wanted a Lamborghini. I got a Metro. The Metro got me across town, and those 3 cylinders had to work overtime, but there was a noticeable gap between my Metro and the V12 I was hoping for.

Will I ever get that Lamborghini? I don't want to make the effort to come up with those payments, and I don't really need to go 0-60 in 3 seconds - I can't imagine what good that would do.

Will I ever get my application perfected? I don't want to put in the untold hours and make the sacrifice to remove every ounce of debt from my system, and it's ok if there's room for improvement - I can't imagine not having a little room.

Maybe the real goal should be something in the middle, where it's affordable and has enough cup holders. Every application is different, and every working environment is different, but almost none of us are going to get that Lamborghini. My friends, make your applications a Camry or an Explorer. Something that's nice but not impossible.

I'll try to pick up the conversation with my friend next time.

posted under category: Software Quality on March 17, 2011 by Nathan

The title of this post pretty much speaks for itself. Dave interviewed me for my upcoming CF.Objective() presentation in May. If you were wondering what "Holistic Program Quality and Technical Debt" is all about, this is an easy way to find out.

You can listen to the interview online - my interview starts around 59:40 and goes through the end of the show (about 24 minutes).

Of course, if you are into CF and programming, you should subscribe to the whole thing and listen regularly.

posted under category: Software Quality on February 16, 2011 by Nathan

I had a phone call a couple weeks ago with a good friend who was chest-high in technical debt. I was thinking of writing about how to avoid technical debt, but instead I found myself questioning if you should avoid technical debt.

Oh, that may not be a familiar term. I wrote about it last year, but the basics are like this: technical debt is a way of explaining how some problems in software development have a serious monetary drawback. Like any debt, it's usually something you take on by a couple of decisions, and if you're not careful, you end up bankrupt. Most money terms apply here, all puns are usually intended.

So the question comes to me, should we even avoid technical debt? Is it strange that the answer is a definite "No?"

Backing up, we need to really define technical debt. That takes us to the discussion between Robert "Uncle Bob" Martin and Martin Fowler. Uncle Bob says A mess is not a technical debt and Martin Fowler rebuts with the technical debt quadrant. I think that Mr. Fowler has it right, especially when he reminds us that it's a metaphor, and it applies to both intentional and unintentional debtors.

When I realized that technical debt is just a metaphor, it became obvious that it isn't a villain. You can choose to take on technical debt. In fact, many of us do it, but maybe we don't realize it. We do it when we cut corners. We do it when we make our navigation static even though we know it should have been dynamic. We do it when the budget gets in the way of our architecture. We do it when we let our customers or managers push us around about adding features and we don't push back on refactoring. We do it when we know we'll lose a job if we bid too high. Sometimes it's just necessary. Either we take on technical debt, or we feed our kids ramen for a month.

Avoiding all technical debt is not an option. You can reduce the amount you take on by refactoring as you go, but sooner or later you will have to take some on. When that time comes, don't shy away! Cut that corner! Make the customer happy! Feed your family! Just make sure you follow the golden rule of technical debt:

Keep Your Technical Debt Visible

It should be written on the whiteboard. It should be high priority in your bug tracker. It should come up in meetings often. It should be in your email signature. (Ok, maybe not that last one.) If you keep it in sight, your management will hear and know about it. Your customers will know about it. It will stay near the top of the to-do list until it's fixed. As soon as you lose sight of it, everyone else will be eager to forget it, and you will never be able to squeeze it in without a lot of pain. When you keep it visible, you will keep yourself sane.

posted under category: Software Quality on September 2, 2010 by Nathan

A couple weeks ago, I had the honor of doing a presentation for the Philly CFUG. The subject was about as dry as I could think to make it, but I think it went off pretty well. Being self-critical after listening to it, I have a pretty flat, monotonous voice, but otherwise, the content is good, IMNSHO.

Here's a link to the preso (thanks Adam): Holistic Program Quality and Technical Debt

posted under category: Software Quality on May 25, 2010 by Nathan

Technical debt has been talked about a lot, but I have been thinking about it pretty hard lately. Here's the concept: make economic analogies for application development to help you (and especially your boss) understand code quality.

Let me lay it down so you can pick it up.

When you write code, you're making a monetary investment. Be it in your own time or knowledge, there's been money spent. If it's not literal money, suspend your disbelief for a second while we pretend that your work is worth some arbitrary amount of cash. There's an amount you should spend to complete a given application, especially to do it right.

Now, when you take a shortcut in your programming, you save money up front. You can finish faster, but you're borrowing time, thus borrowing money. Eventually, that money has to be paid back, and you will pay it back.

Paying back technical debt means refactoring the application to undo those coding shortcuts, adding unit tests, making the application maintainable and adhering to best practices. The closer you are to the time you acquired the debt, the easier it is to pay it off. Think of credit cards; pay those off at the end of the month or else you accumulate interest.

Oh, and it's the interest payments that will really kill you.

Until you pay off that technical debt, every time you touch the application, you have to pay interest. In the past, you borrowed time from the future. Now the cruft in the code makes any subsequent task take longer. That is your interest payment. It comes off the top and you never pay it off until you remove the crufty code.
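
To put rough numbers on the interest idea, here is a minimal sketch in Python. Every figure in it is made up for illustration: the 10-hour task, the 25% slowdown, and the 15-hour refactoring are assumptions, and the function name total_hours is hypothetical. It only shows how the extra cost per task adds up when the debt stays on the books versus when it gets paid off early.

```python
def total_hours(task_hours, interest_rate, tasks, payoff_hours=0.0, payoff_before=None):
    """Hours spent on `tasks` tasks while carrying technical debt.

    Each task costs `interest_rate` extra (the interest) until the debt is
    paid off. `payoff_before` is the index of the task before which we
    refactor, at a one-time cost of `payoff_hours` (the principal).
    """
    hours = 0.0
    for n in range(tasks):
        if payoff_before is not None and n == payoff_before:
            hours += payoff_hours  # one-time refactoring cost
        in_debt = payoff_before is None or n < payoff_before
        hours += task_hours * (1 + interest_rate) if in_debt else task_hours
    return hours


# Assumed numbers: 20 tasks of 10 hours each, 25% slower while in debt.
never_paid = total_hours(10, 0.25, tasks=20)
paid_early = total_hours(10, 0.25, tasks=20, payoff_hours=15, payoff_before=2)

print(never_paid)  # 250.0
print(paid_early)  # 220.0
```

With these assumed numbers, carrying the debt forever costs 50 extra hours, while paying it off after two tasks costs 20 (5 of interest plus the 15-hour refactoring).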

Code with high technical debt tends to be brittle. One change means everything breaks, so we spend our time either being extra careful or accidentally creating a wide range of errors across the application. Interest makes programming harder, and harder is not what we need.

posted under category: Software Quality on February 20, 2009 by Nathan

I have this open-source toy project I've been playing with, SQL Surfer. It's a little web-based SQL IDE with an amazing build process. How could I not share it with you?

The idea was pretty simple. When I work on my program, I want to see it like a normal program, multiple files, separate CSS and JS, included cfms, conditionals based on the server version, but when I release it, I want it in one, single file: easy to distribute, easy to throw on a server, no install, no folder structure to preserve, no dependencies to remember. It seems that basically no one has ever done this, but it's not too difficult.

The way it works is this: when I want to make a release, I just run the Ant build file. One click. Ant does what it does best: it preps the build, cleans out the output folder, and, in the end, zips the results and whatnot. I couldn't pull off the dynamic file embedding with plain Ant XML, though, so that's where Groovy comes in. I've got Groovy searching for included files (cfinclude, script, style, etc.) and embedding the files in place of the references to them. Plus, while I was mashing files together, it seemed logical to compress them, even if just a little.
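
The embedding step can be sketched roughly like this. This is not the Groovy code from the project, just a Python illustration of the idea, and everything in it is an assumption: the function name embed, the regular expressions, and the file names in the example are all hypothetical. It also assumes very regular markup (double-quoted attributes in a fixed order) and resolves every path against one root.

```python
import re

# Simplified patterns; real markup would need more forgiving matching.
INCLUDE_RE = re.compile(r'<cfinclude\s+template="([^"]+)"\s*/?>', re.IGNORECASE)
SCRIPT_RE = re.compile(r'<script\s+src="([^"]+)"\s*>\s*</script>', re.IGNORECASE)
STYLE_RE = re.compile(r'<link\s+rel="stylesheet"\s+href="([^"]+)"\s*/?>', re.IGNORECASE)


def embed(text, read):
    """Replace file references in `text` with the referenced files' contents.

    `read` is any callable that maps a relative path to that file's text.
    Included templates are processed recursively so that their own
    references get embedded too.
    """
    text = INCLUDE_RE.sub(lambda m: embed(read(m.group(1)), read), text)
    text = SCRIPT_RE.sub(lambda m: "<script>" + read(m.group(1)) + "</script>", text)
    text = STYLE_RE.sub(lambda m: "<style>" + read(m.group(1)) + "</style>", text)
    return text


# Hypothetical project files, held in a dict instead of on disk.
files = {
    "header.cfm": '<h1>SQL Surfer</h1><script src="app.js"></script>',
    "app.js": "var ready = true;",
    "main.css": "body { margin: 0; }",
}
page = '<link rel="stylesheet" href="main.css"><cfinclude template="header.cfm">'
single_file = embed(page, files.__getitem__)
print(single_file)
```

Passing the reader in as a callable keeps the sketch easy to try without touching the file system; for a real build you would hand it something that reads from the source folder.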

The result is a mashup of my entire application, nearly obfuscated into one gigantic, single-file, working application.

Actually, it goes a little further still. I wanted to have a version that takes advantage of ColdFusion 8's new <cfdbinfo> tag, which, by the way, is awesome, but I also wanted to stay compatible with CF 6 and 7, even with a lesser experience. When you type a new CF tag into an older version, you get a compile error. That makes it hard to develop and hard to release. My solution was to drop in <cfif left(server.ColdFusion.productVersion,1) GTE 8>, followed by a cfinclude, CSS or JS reference. Then Ant makes 2 files for 2 releases, and my Groovy build file has 2 functions: removeCF8, which removes the if blocks entirely for the CF 6/7 release, and removeIfCF8, which removes just the if statements and embeds the files for the CF 8 release.
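
Here is a rough Python sketch of what those two functions might look like. The names mirror removeCF8 and removeIfCF8, but the bodies are a guess at the idea, not the Groovy source, and the file name dbinfo.cfm is hypothetical. The sketch assumes the version check is written exactly as shown above and that the blocks contain no nested cfif or cfelse tags.

```python
import re

# Matches one version-check block and captures what is inside it.
CF8_BLOCK = re.compile(
    r"<cfif left\(server\.ColdFusion\.productVersion,1\) GTE 8>(.*?)</cfif>",
    re.DOTALL | re.IGNORECASE,
)


def remove_cf8(text):
    """CF 6/7 release: drop each version-check block and everything in it."""
    return CF8_BLOCK.sub("", text)


def remove_if_cf8(text):
    """CF 8 release: keep the block's contents, drop only the cfif wrapper."""
    return CF8_BLOCK.sub(lambda m: m.group(1), text)


source = (
    "<p>tables</p>"
    "<cfif left(server.ColdFusion.productVersion,1) GTE 8>"
    '<cfinclude template="dbinfo.cfm">'
    "</cfif>"
    "<p>footer</p>"
)

print(remove_cf8(source))     # <p>tables</p><p>footer</p>
print(remove_if_cf8(source))  # <p>tables</p><cfinclude template="dbinfo.cfm"><p>footer</p>
```

After remove_if_cf8 runs, the surviving cfinclude is an ordinary reference, so the embedding step can pick it up like any other.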

The actual result is 2 files, all mashed up and working perfectly for different server versions.

Going overboard, I also embed EditArea, for the code editor. Of course EditArea doesn't care about an all-in-one-file project. I use Groovy to insert EditArea into the main file as well as change EditArea itself, at build time, to point to the embedded parts in the CF application instead of its own directory structure.

Taking it beyond reality, I realize now that I could probably include a framework like Barney Boisvert's excellent FB3 Lite, and then potentially images in b64. The only challenge that this approach leaves me with is CFCs. I love my objects, but I haven't figured out how exactly to embed them.

Ouch. Even just typing that last sentence makes my head explode with ideas. Taking it way too far, I could probably embed the components I use onto the main file, stripping out the cfcomponent tags, then to recreate them, create instances of the generic coldfusion component, WEB-INF.cftags.component, and re-constitute them with duck typing and method injection... Yeah, maybe some day, if I get really into it.

You can check out the source code right now and compile it yourself, but to hear about it first hand, visit the AZCFUG Feb meeting, 2/25/09, where I'll be showing it off. If you miss it, don't sweat it, I'll have the slides out by the next day. I'm undecided on having a connect meeting for it. If we choose to, I'll post it up here.

posted under category: Software Quality on September 6, 2008 by Nathan

Selenium is an open source, simple web site testing and automation platform. I say it's a "platform" because it can't really be classified as just a tool, a language, API or a full application, but rather to a degree, all of these.

The basic idea is to create, using very plain, easy HTML, a repeatable script that is then executed by a Selenium runner.

I recommend using the Selenium IDE to create your first script. It's a Firefox add-on that records your clicks. Save the recording to a file (it generates plain HTML) and you can repeat it later on. It won't always work perfectly, however, so you can edit it with any text or HTML editor. You just need a Selenium language reference to get by.

Selenium actions and accessors are the language you program your scripts in. Generally, you would use the click action, type to enter values into forms and things like assertText to make sure certain content exists.

One part of using the language you will have trouble with is selecting elements. Hopefully, you use uniquely named text links everywhere. If not, Selenium supports XPath very nicely. For me, it's a matter of sprucing up my XPath skills, something I wanted to do anyway.

Selenium scripts consist of a table with three columns. The first column is the action to perform. The second column is like the first argument of a function: usually this is the item to perform the action on, and it is almost always used. The third column is like the second argument of a function: usually the content of the action, like the text to enter into the form item named in the second column. This column is not always used.
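
To make the three-column layout concrete, here is a small Python sketch that writes such a table from a list of (action, target, value) triples. The function name selenese_table, the page path, the field names, and the expected text are all made up for illustration, and the exact HTML that the Selenium IDE saves has more wrapping around it than this.

```python
from html import escape


def selenese_table(title, steps):
    """Build a three-column HTML table: action, target, value."""
    rows = ['<tr><td colspan="3">' + escape(title) + "</td></tr>"]
    for action, target, value in steps:
        cells = "".join("<td>" + escape(cell) + "</td>" for cell in (action, target, value))
        rows.append("<tr>" + cells + "</tr>")
    return "<table>\n" + "\n".join(rows) + "\n</table>"


# Hypothetical login test; an empty string means the column is unused.
steps = [
    ("open", "/login.cfm", ""),
    ("type", "username", "nathan"),
    ("clickAndWait", "link=Log in", ""),
    ("assertText", "//h1", "Welcome"),
]
script = selenese_table("Login test", steps)
print(script)
```

The last step shows an XPath locator in the second column and the expected text in the third; the open and clickAndWait steps leave the third column empty.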

Selenium scripts must run within your web browser; however, different tools exist to automate your browser:

The Selenium IDE will run a file off your disk. This is perfect for single scripts and quick browser automation.

Selenium Core is a javascript application that runs on your web site - put it on your dev/QA site. Being a javascript application, there is no server-side interaction and nothing to install. This method requires your scripts to live on the local web site as well (browser security). This is actually very natural feeling, as then you can check them into your source control (you HAVE source control, right?).

Selenium RC (remote control) allows you to control tests from a remote browser on a different server. It even allows you to take screenshots. I haven't used this yet.

If you are testing a large application, you should make a test suite. It's a simple HTML document that links to your different test cases. With Selenium Core, you can load your suite and run all your cases in order. It will go through each one and give you a pass/fail summary like any good QA tool.

You can also use Selenium for easy web automation. I used it, for example, to automate the steps needed to log into a timer system and enter my daily time.

Selenium goes much deeper than this, but my blog post doesn't. Read up about it on the OpenQA web site.

I hate to say this. It's 2011, I shouldn't have to. If you don't have source control, you have done yourself a ginormous disservice. If you are working on your own, you will love what a source control system will do for you. If you work on a team without it, you are handicapping every aspect of the team's ability. If your boss tells you "no," tell your boss "yes."